Madhava HPC Cluster

System Configuration

System Hardware & Configuration

Total number of nodes: 36 (1 + 31 + 2 + 2)

- Master/Login/Service nodes: 1
- CPU nodes: 31
- GPU accelerated nodes: 2
- Storage nodes: 2

Master/Login/Service Node: 1

The Madhava HPC cluster is an aggregation of a large number of computers connected through networks. The basic purpose of the master node is to manage and monitor each constituent component of Madhava HPC from a system's perspective. This involves monitoring the health of the components, the load on them, and the utilization of the various sub-components of the computers in Madhava HPC.

This node also works as the login node, which is used for administrative tasks such as editing, writing scripts, transferring files, and managing your jobs. You will always connect to the login node first. From there you can connect to a compute node and run an interactive job, or submit batch jobs through the batch system (SLURM) to run on the compute nodes. The login node is the entry point for all Madhava HPC users and is therefore shared.

The same node also works as a service node, providing job scheduling and other services to the cluster.
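
As a rough illustration of the batch workflow described above, the following Python sketch assembles a SLURM batch script and hands it to `sbatch`. The `#SBATCH` options used are standard SLURM ones, but the job name, the placeholder program `./my_program`, the file name `job.sh`, and the helper names `build_batch_script` and `submit` are all assumptions made for this example. Partition and account names are site-specific and are not given on this page, so none are set.

```python
import subprocess


def build_batch_script(job_name, nodes, tasks_per_node, walltime, command):
    """Return the text of a SLURM batch script built from standard #SBATCH options."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --ntasks-per-node={tasks_per_node}",
        f"#SBATCH --time={walltime}",
        "#SBATCH --output=%x-%j.out",  # %x expands to the job name, %j to the job ID
        "",
        command,
        "",
    ]
    return "\n".join(lines)


def submit(script_text, path="job.sh"):
    """Write the script to disk and pass it to sbatch (requires SLURM on this host)."""
    with open(path, "w") as handle:
        handle.write(script_text)
    return subprocess.run(["sbatch", path], capture_output=True, text=True)


if __name__ == "__main__":
    # Hypothetical job: one node, 40 tasks, one hour, running a placeholder program.
    print(build_batch_script("demo", 1, 40, "01:00:00", "srun ./my_program"))
```

Calling `submit(...)` with the generated text would only work on a host where `sbatch` is available, such as the login node; the script above just prints the job file so it can be inspected first.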

| Master/Login/Service Nodes: 1 | |
|---|---|
| Model | Lenovo ThinkSystem SR650 |
| Processor | 2 x Intel(R) Xeon(R) Gold 6248 CPU @ 2.50GHz |
| Cores | 64 cores |
| RAM | 192 GB |
| HDD | 2 TB |

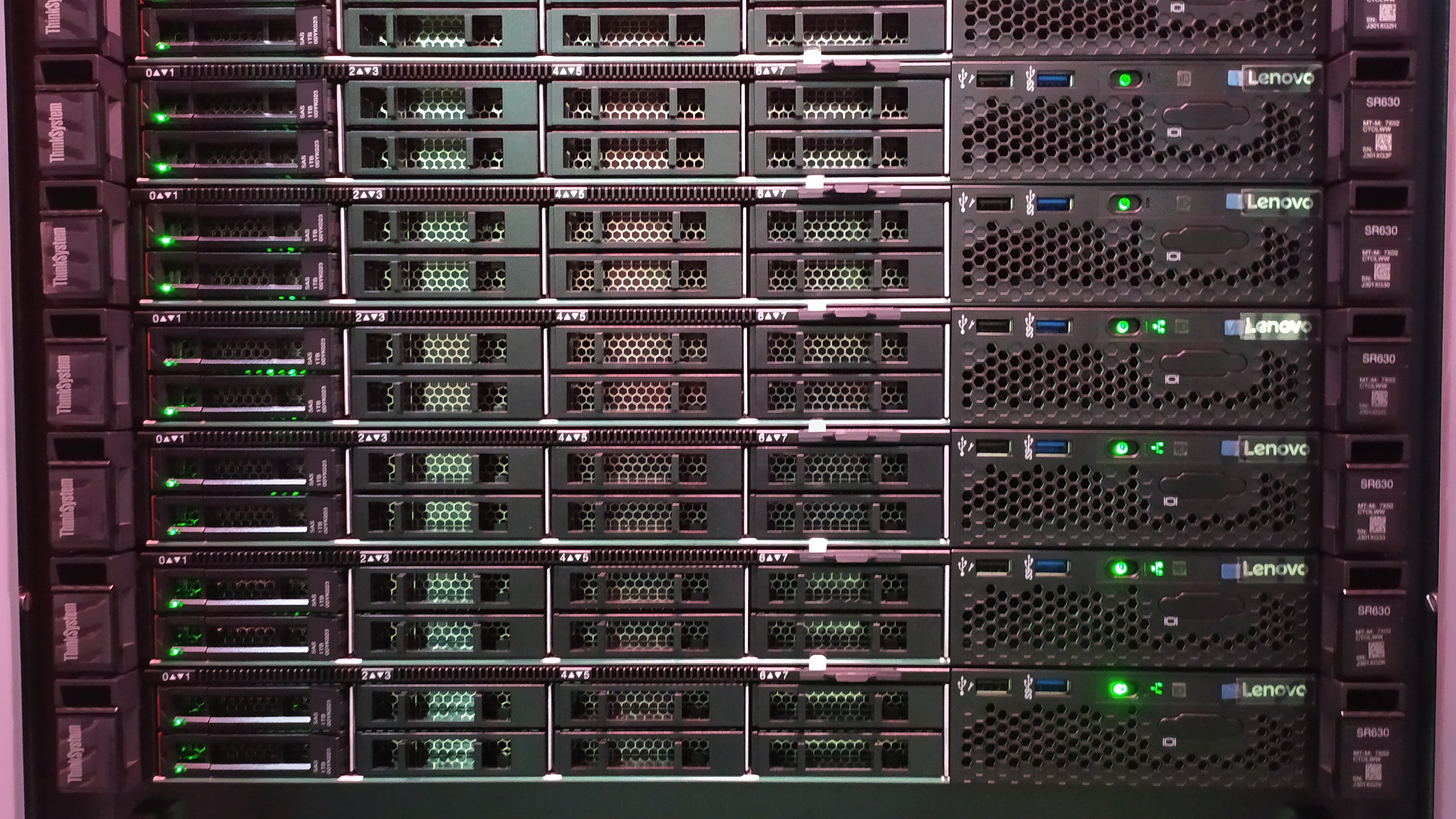

CPU Nodes: 31

CPU nodes are the workhorses of Madhava HPC. All CPU-intensive work is carried out on these nodes. Users can access these nodes from the login node to run interactive or batch jobs, as described above.

| CPU Only Nodes: 31 | |
|---|---|
| Model | Lenovo ThinkSystem SR630 |
| Processor | 2 x Intel(R) Xeon(R) Gold 6248 CPU @ 2.50GHz |
| Cores | 40 cores |
| RAM | 192 GB |
| HDD | 1 TB |
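
The figures in the CPU node table can be used to estimate how many nodes a job needs. The sketch below is a minimal example, assuming every task is given whole cores and that all 40 cores of a node are available to jobs; the function name `nodes_needed` is hypothetical, and any scheduler limits on this cluster are not modelled.

```python
import math

CPU_NODE_COUNT = 31        # CPU-only nodes, from the table above
CORES_PER_CPU_NODE = 40    # cores per CPU node, from the table above
RAM_PER_CPU_NODE_GB = 192  # RAM per CPU node, from the table above


def nodes_needed(tasks, cores_per_task=1):
    """Return the smallest number of CPU nodes that can hold the requested tasks."""
    if tasks < 1 or cores_per_task < 1:
        raise ValueError("tasks and cores_per_task must be positive")
    if cores_per_task > CORES_PER_CPU_NODE:
        raise ValueError("a single task cannot span more cores than one node has")
    tasks_per_node = CORES_PER_CPU_NODE // cores_per_task
    nodes = math.ceil(tasks / tasks_per_node)
    if nodes > CPU_NODE_COUNT:
        raise ValueError("request exceeds the 31 CPU nodes in the cluster")
    return nodes


if __name__ == "__main__":
    print("Nodes for 100 single-core tasks:", nodes_needed(100))
    print("Nodes for 10 tasks of 8 cores each:", nodes_needed(10, cores_per_task=8))
    print("RAM per core (GB):", RAM_PER_CPU_NODE_GB / CORES_PER_CPU_NODE)
```

Under these assumptions a job with 100 single-core tasks needs 3 nodes, and a fully packed node leaves 4.8 GB of RAM per core.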

GPU Nodes: 2

GPU compute nodes have CPU cores along with accelerator cards. Some applications achieve markedly higher performance on GPUs. To exploit them, one has to use special libraries that map computations onto the graphics processing units (typically CUDA or OpenCL).

| GPU Nodes: 2 | |
|---|---|
| Model | Lenovo ThinkSystem SR650 |
| Processor | 2 x Intel(R) Xeon(R) Gold 6248 CPU @ 2.50GHz |
| Cores | 40 cores |
| RAM | 192 GB |
| HDD | 1 TB |
| GPU | 2 x Tesla V100-PCIE-32GB per node |
| GPU cores per node | 2 x 5120 |
| GPU Tensor Cores | 2 x 640 |
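
Before running a GPU application it can help to confirm that both cards on a node are visible. The sketch below is one possible check, assuming the `nvidia-smi` tool that ships with the NVIDIA driver is on the `PATH` of the GPU nodes; the helper names `parse_gpu_list` and `visible_gpus` are made up for this example.

```python
import shutil
import subprocess

# Standard nvidia-smi query: one CSV line per GPU with its name and total memory.
QUERY = ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"]


def parse_gpu_list(text):
    """Turn nvidia-smi CSV output into a list of (name, memory) tuples."""
    gpus = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        name, _, memory = line.partition(",")
        gpus.append((name.strip(), memory.strip()))
    return gpus


def visible_gpus():
    """Return the GPUs visible on this node, or an empty list if none can be queried."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(QUERY, capture_output=True, text=True, check=False)
    if result.returncode != 0:
        return []
    return parse_gpu_list(result.stdout)


if __name__ == "__main__":
    gpus = visible_gpus()
    print(f"{len(gpus)} GPU(s) visible")
    for name, memory in gpus:
        print(f"  {name} ({memory})")
```

On a node without an NVIDIA driver, such as the login or CPU nodes, the check simply reports zero GPUs rather than failing.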

Storage/IO Nodes: 2

These nodes provide storage service to the head and compute nodes using GPFS.

| Storage/IO Nodes: 2 | |
|---|---|
| Model | Lenovo ThinkSystem SR630 |
| Processor | 2 x Intel(R) Xeon(R) Gold 6248 CPU @ 2.50GHz |
| Cores | 40 cores |
| RAM | 192 GB |
| HDD | 1 TB |

Storage Array: 2

The storage array is the backend to the I/O nodes, which provide the storage service to the head and compute nodes.

| Storage Array: 2 | |
|---|---|
| Model | ThinkSystem DE6000H, 2 x DE Controller with 16 GB, 60 Chassis |
| Home Space | 204 TB |
| Scratch Space (HDD) | 60 TB |